HIVE: Harnessing Human Feedback for Instructional Visual Editing

1. Salesforce AI

2. Stanford University

3. Northeastern University

*Denotes equal contribution

Abstract

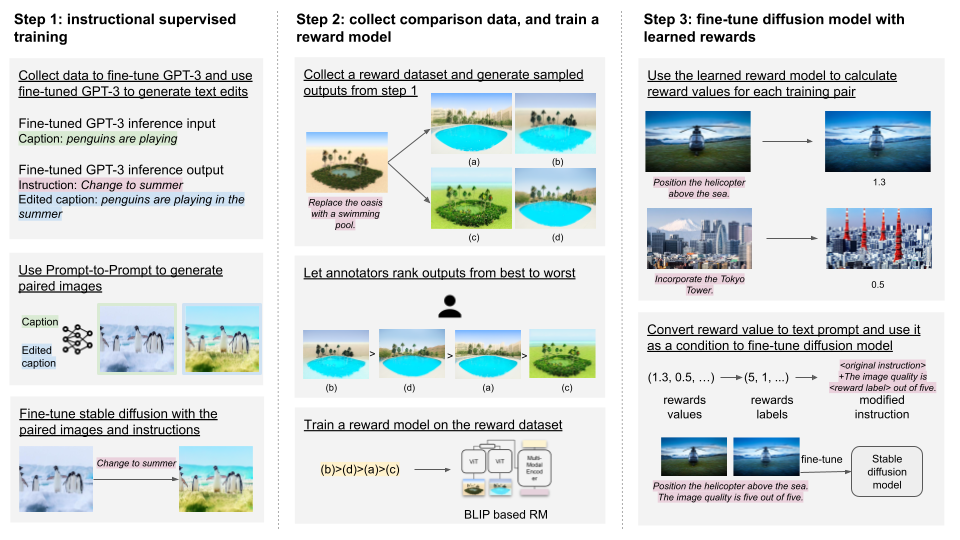

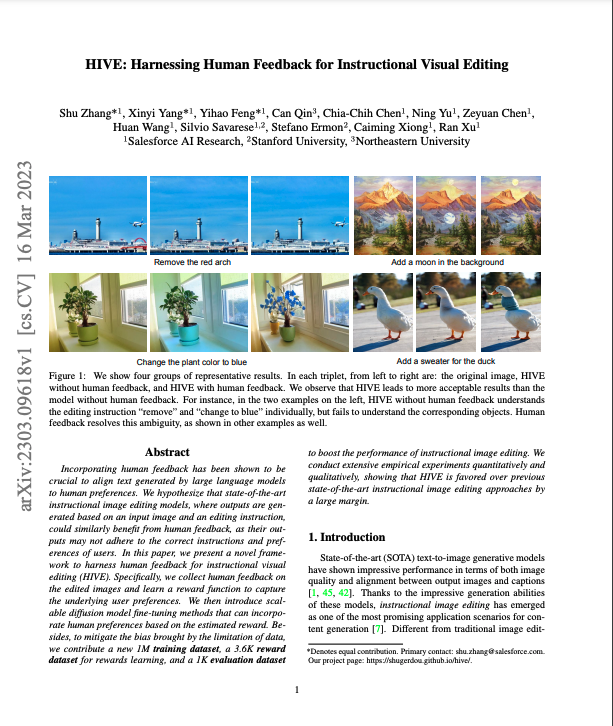

Incorporating human feedback has been shown to be crucial for aligning text generated by large language models with human preferences. We hypothesize that state-of-the-art instructional image editing models, which generate outputs from an input image and an editing instruction, could similarly benefit from human feedback, since their outputs may not follow the user's instructions or match the user's preferences. In this paper, we present a novel framework to harness human feedback for instructional visual editing (HIVE). Specifically, we collect human feedback on edited images and learn a reward function that captures the underlying user preferences. We then introduce scalable diffusion model fine-tuning methods that incorporate human preferences based on the estimated reward. In addition, to mitigate bias caused by limited data, we contribute a new 1M training dataset, a 3.6K reward dataset for reward learning, and a 1K evaluation dataset to boost the performance of instructional image editing. We conduct extensive quantitative and qualitative experiments, showing that HIVE is favored over previous state-of-the-art instructional image editing approaches by a large margin.
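The abstract names two learning steps, fitting a reward function to human feedback and fine-tuning the diffusion model with the estimated reward, but does not give the loss functions. The following is only a minimal sketch of one common way to realize each step. It assumes a Bradley-Terry style pairwise loss for the reward model and an exponential reward weighting of per-sample denoising losses. The function names and the temperature `beta` are hypothetical and are not taken from the HIVE code.

```python
import math


def pairwise_preference_loss(reward_preferred, reward_rejected):
    """Assumed Bradley-Terry loss: -log(sigmoid(r_preferred - r_rejected)).

    The loss is small when the reward model scores the human-preferred
    edit above the rejected one.
    """
    diff = reward_preferred - reward_rejected
    # Numerically stable evaluation of log(1 + exp(-diff)).
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


def reward_weights(rewards, beta=1.0):
    """Assumed weighting: exp(r / beta), rescaled so the mean weight is 1."""
    top = max(rewards)
    # Subtracting the maximum avoids overflow and cancels after rescaling.
    unnormalized = [math.exp((r - top) / beta) for r in rewards]
    mean = sum(unnormalized) / len(unnormalized)
    return [u / mean for u in unnormalized]


def reward_weighted_loss(per_sample_losses, rewards, beta=1.0):
    """Average of per-sample denoising losses, weighted by estimated reward."""
    weights = reward_weights(rewards, beta)
    total = sum(w * l for w, l in zip(weights, per_sample_losses))
    return total / len(per_sample_losses)
```

In this sketch, `per_sample_losses` stands in for the denoising error of each training example. Samples with a higher estimated reward contribute more to the fine-tuning objective, and a smaller `beta` makes that preference sharper.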

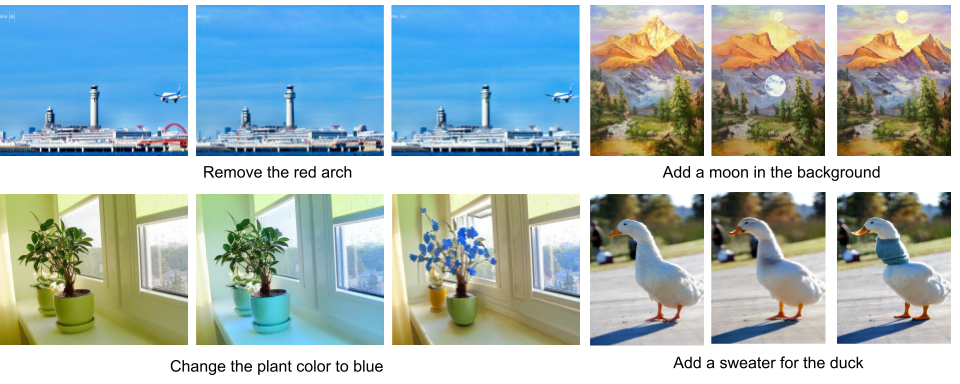

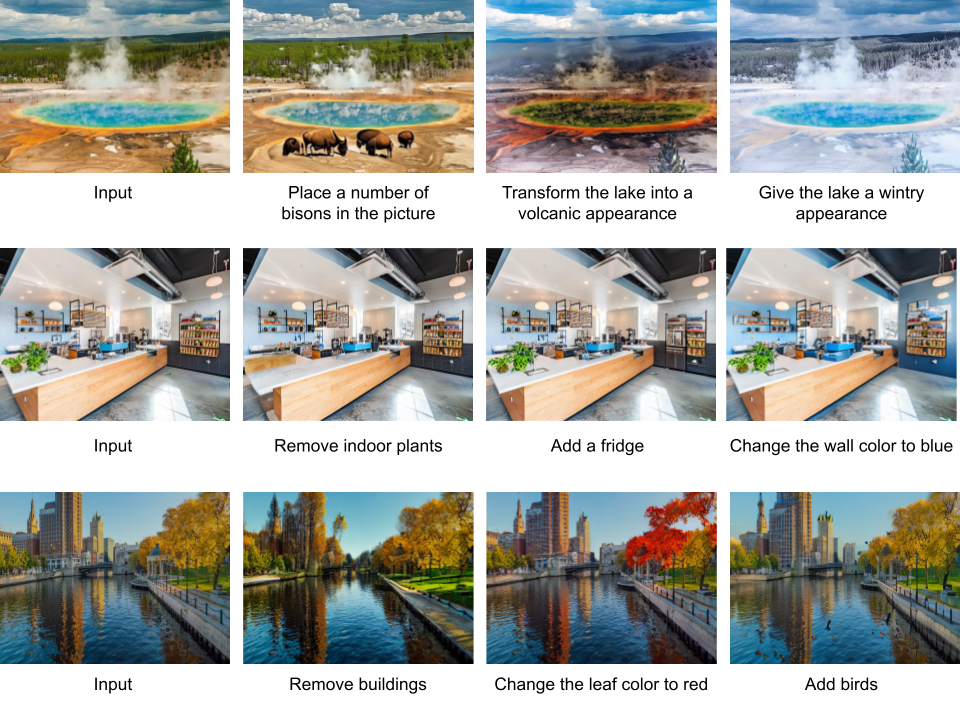

Results

From left to right: Input image, HIVE without human feedback, HIVE with human feedback.

More results.

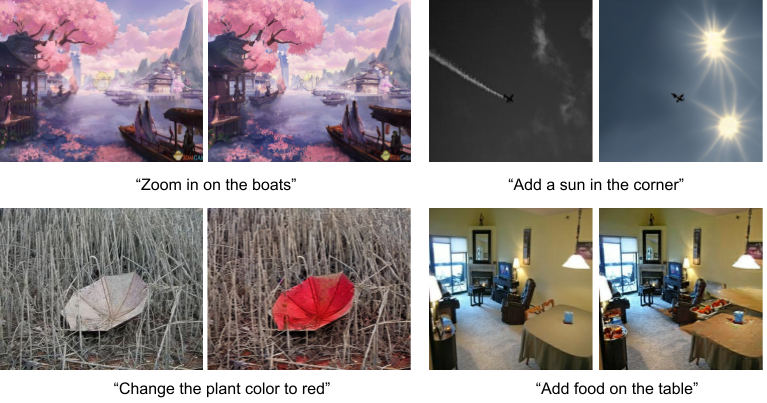

Failure cases.

Paper

Citation

@article{zhang2022hive,
  author = {Zhang, Shu and Yang, Xinyi and Feng, Yihao and Qin, Can and Chen, Chia-Chih and Yu, Ning and Chen, Zeyuan and Wang, Huan and Savarese, Silvio and Ermon, Stefano and Xiong, Caiming and Xu, Ran},
  title = {HIVE: Harnessing Human Feedback for Instructional Visual Editing},
  journal = {arXiv preprint arXiv:2303.09618},
  year = {2023}
}

Acknowledgement

We thank the authors of InstructPix2Pix; our code is built upon theirs.

A subset of our evaluation data comes from the EditBench dataset.